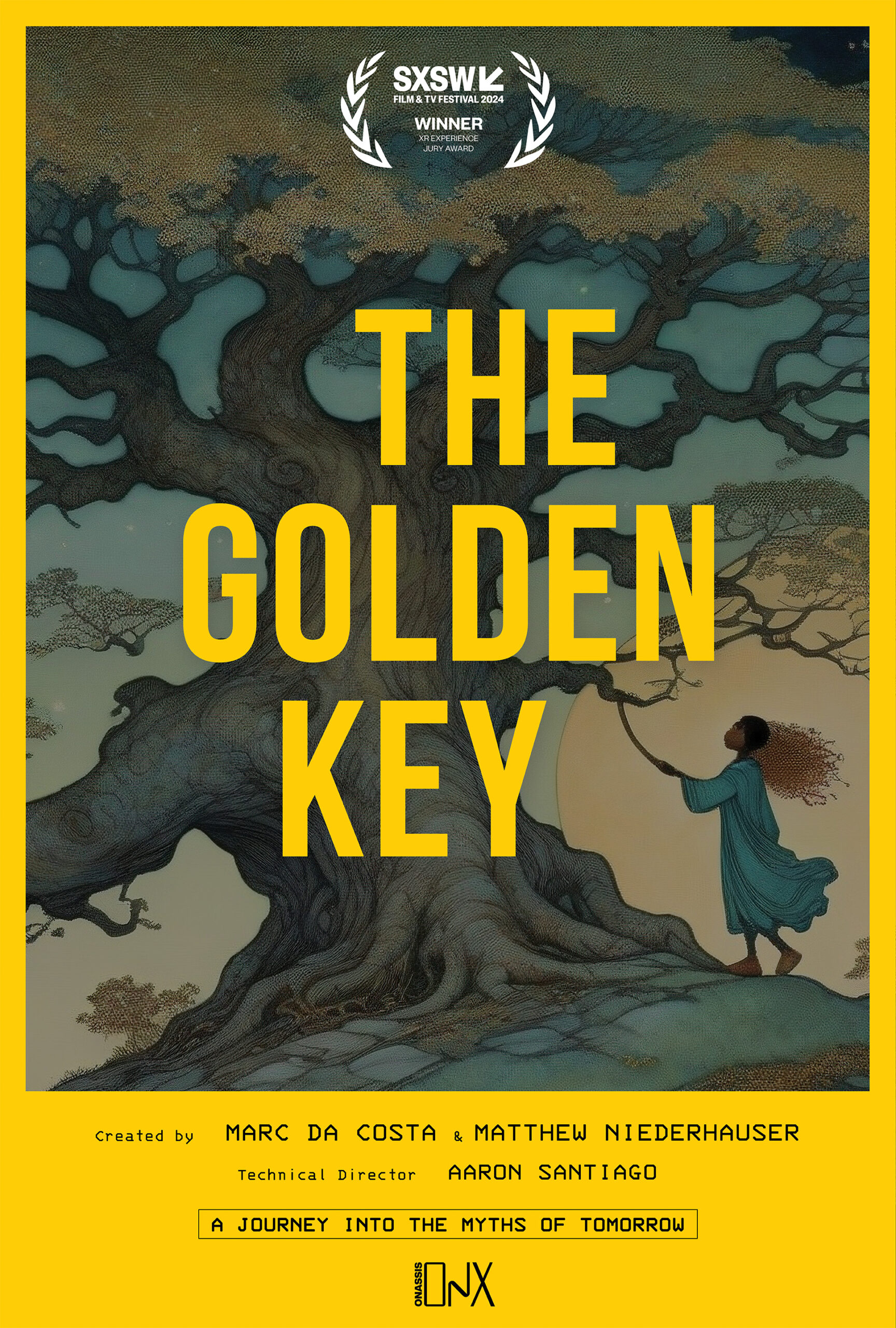

THE GOLDEN KEY

Creator & Producer

What power do myths have? Who gets to shape the myths of the future? “The Golden Key” brings audiences into contact with the mythological dreams of an AI as it writes and visualizes a never-ending story.

Imagining a future world that has suffered the worst ravages of climate change, “The Golden Key” invites participants to try to reconstruct a lost mythic past by responding to prompts at computer terminals on the exhibition floor. As the installation unfolds, an open-ended progression of narrative, sight and sound is generated while the work embarks on a process of recombinant storytelling, incorporating the audience’s words with an AI that has been trained on tens of thousands of folk tales from around the world, a dataset of narrative fragments that echo our most basic stories and concerns.

As a work of speculative mythology from a post-climate-collapse future, “The Golden Key” allows audiences to encounter and intervene in the new narrative and myth-making powers imagined for AI. By encouraging interaction and play, it seeks to give participants agency in understanding how these tools work, the power to shape their outputs, and a way to imagine alternative futures together. While new technologies may threaten to take our stories and creativity away, this work seeks to disrupt and complicate that process, creating new myths along the way and fostering a sense of collective action through a shared creative act.

Emerging machine learning technologies give the spooky feeling of inferring or grasping at a world based on the input of an individual, but how do the input and output actually relate? How will they reinforce and build structures of meaning? How does a relationship build between different representations of code and humans? This call and response between a participant and machine learning model is where our work plays. “The Golden Key” does not ask whether this next generation of artificial intelligence is actually sentient, but rather whether we can dream with it and extend our conceptual boundaries within a new technological paradigm.

Created by Marc Da Costa and Matthew Niederhauser

Technical Direction by Aaron Santiago

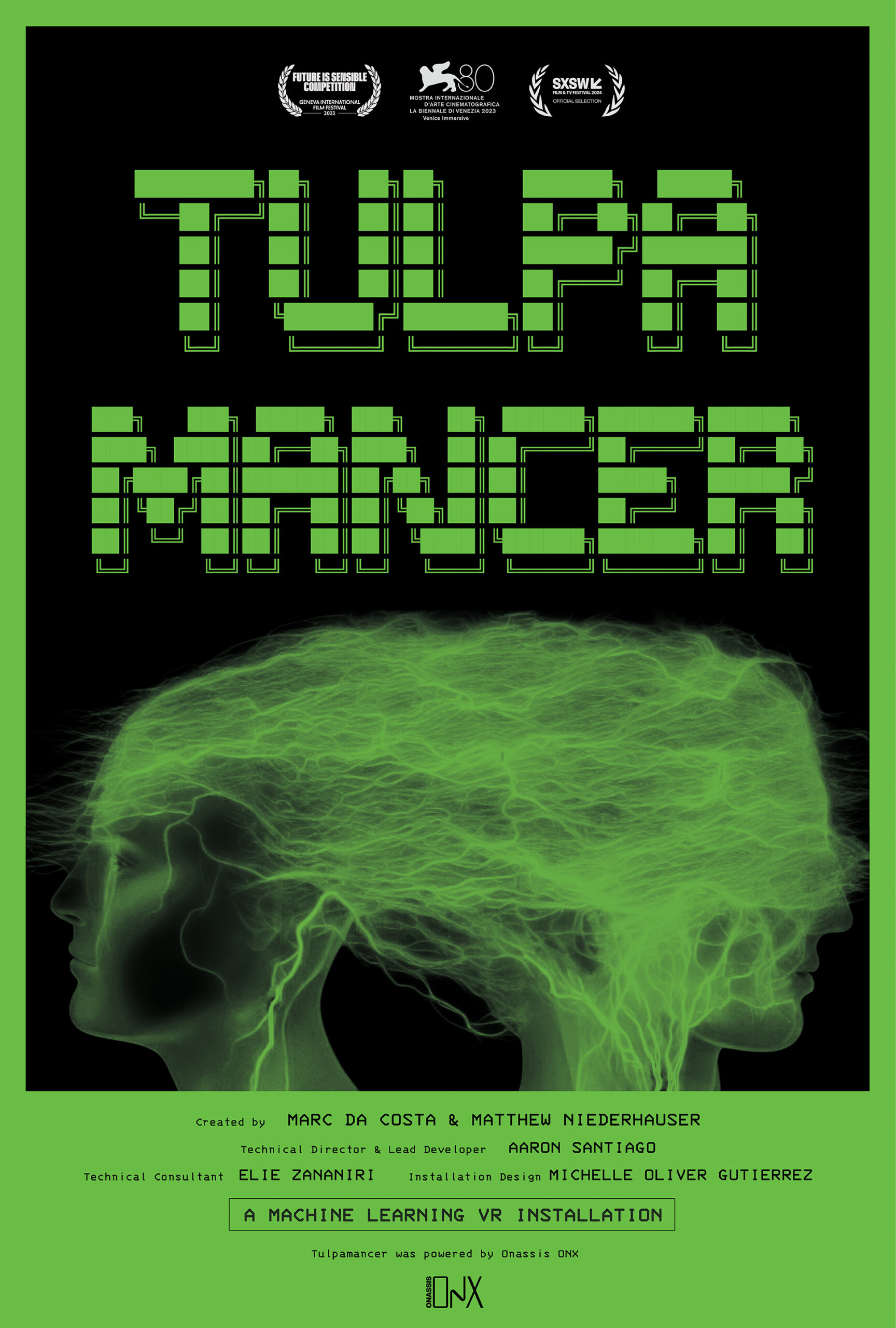

TULPAMANCER

Creator & Producer

“Tulpamancer” is a machine learning VR installation that shapes a dreamlike, immersive encounter with the memories and possible futures of each participant. Inspired by the concept of a tulpa, a being which begins in the imagination but then develops its own presence and reality, the experience explores how the intersection of generative AI and VR can shape new ways of encountering ourselves.

Sitting down at a retro computer terminal, participants first encounter the tulpa through a series of questions about their own lives. Designed in consultation with large language models and shamans, the questions prompt participants to revisit their childhood, major life moments, and even short-term memories of what happened earlier in the day. After typing out their responses, they are invited to meet the tulpa in a world prepared uniquely for them.

Participants then put on a VR headset and are led on a journey by their tulpa through a series of uniquely generated virtual scenes and voiceovers that invoke and question the memories of their own past and their potential fates. Ultimately, every interaction produces a unique work that is deleted at the conclusion of its viewing, left only to resonate in the minds of each participant.

What does it mean to share your life with a machine? The tulpa, an idea rooted in Tibetan Buddhism but popularized by the theosophical thinker Annie Besant (1847-1933), refers to the physical manifestation of thought through spiritual practice and intense concentration. A transformation in society now appears to loom with the emergence of AI, a technology that derives its power by concentrating intensely on enormous volumes of online text and images, the digital traces of our collective unconscious. The tulpa was a way of making sense of and providing alternative frames for modernity. This work thus provides participants an occasion to explore alternative histories and futures for AI.

Emerging machine learning technologies give the spooky feeling of inferring or grasping at a world based on the input of an individual. But how do the input and output actually relate? This call and response between a participant and machine learning model is where “Tulpamancer” plays, asking not whether this next generation of artificial intelligence is actually sentient, but rather whether we can dream with it.

Created by Marc Da Costa and Matthew Niederhauser

Technical Direction by Aaron Santiago

PARALLELS

Creator & Producer

“Parallels” is a site-specific, responsive machine-learning installation, which transforms a large LED wall into a portal for visitors to meet the world and themselves through the lens of a neural network. The work enables a visceral and unmediated experience of the way in which machine vision discerns the environment through live digital decoding of a changing landscape, dreaming and reframing it in a constant conversation with those who encounter it.

The installation particularly seeks to recontextualize emerging machine learning technologies in the natural landscape, questioning how new forms of artificial knowledge are changing our perception of the world around us. The installation thus draws the sphere of generative AI into conversation with the landscape and the historical embeddedness of the viewer. How do we look when reimagined by statistical alchemy unleashed on the collective legacy of billions of online images?

“Parallels” was first conceived as part of Plásmata II for the Onassis Foundation and consisted of a large LED wall along the Ioannina promenade with a view across Lake Pamvotis in Greece. A camera mounted behind it captured the vista and created a seamless live digital rendition of the scene as construed through a variety of generative AI models. This parallel view of the world was influenced by input provided by the audience passing in front of the camera as well as other environmental factors like light and sound levels.

“Parallels” was most recently shown at the Pérez Art Museum Miami and Frost Science Museum as part of the Filmgate Miami Immersive Festival, where it won the Best of Tech award for its innovative use of generative machine learning tools. The aesthetics of the installation were adapted to the locality in order to reimagine these cultural institutions and the surrounding urban landscape through the eyes of a neural network. Each activation of “Parallels” is site-specific, with the visual styles reflecting the cultural and historical context of its environment.

Created by Marc Da Costa and Matthew Niederhauser

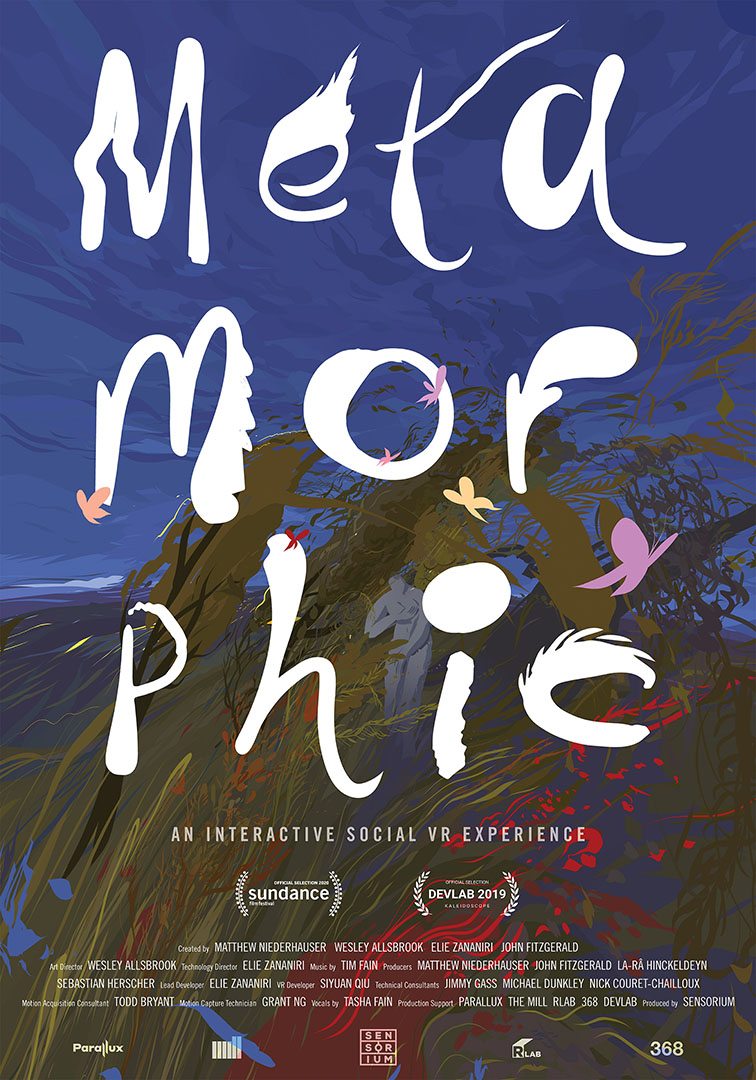

METAMORPHIC

Creator & Producer

“Metamorphic” is a social VR experience where participants explore the ephemeral nature of the self. It creates opportunities to connect, opening up possibilities for participants to understand their bodies as vehicles for change. Movement and play shape appearances through a series of majestically drawn worlds. This project also aims to encourage dialogue around what it means to respect others in a space with less context than the real world or the Internet.

VR is a medium particularly suited to the exploration of embodiment and identity. The mechanics of “Metamorphic” are designed to create an environment where participants can interact with each other with no initial point of reference, then playfully amend their world through interactivity. The experience can instill a sense of wonder and possibility for how people might explore future virtual worlds and come to understand their visual identities as fluid. Collective virtual spaces are already becoming popular, but they should expand our sense of self rather than curtail it.

Aside from imagining “Metamorphic” as a location-based installation, the creators also hope to expand the project, so that avatars and the environments participants inhabit may be created by many artists with singular voices. The world premiere at Sundance New Frontier was an open call for collaborators who also work in digital mediums. “Metamorphic” explores new territory not only through its interactive design and experiential arc, but also establishes a unique platform for artistic interchange and personal exploration.

Created by Matthew Niederhauser, Wesley Allsbrook, Elie Zananiri, and John Fitzgerald

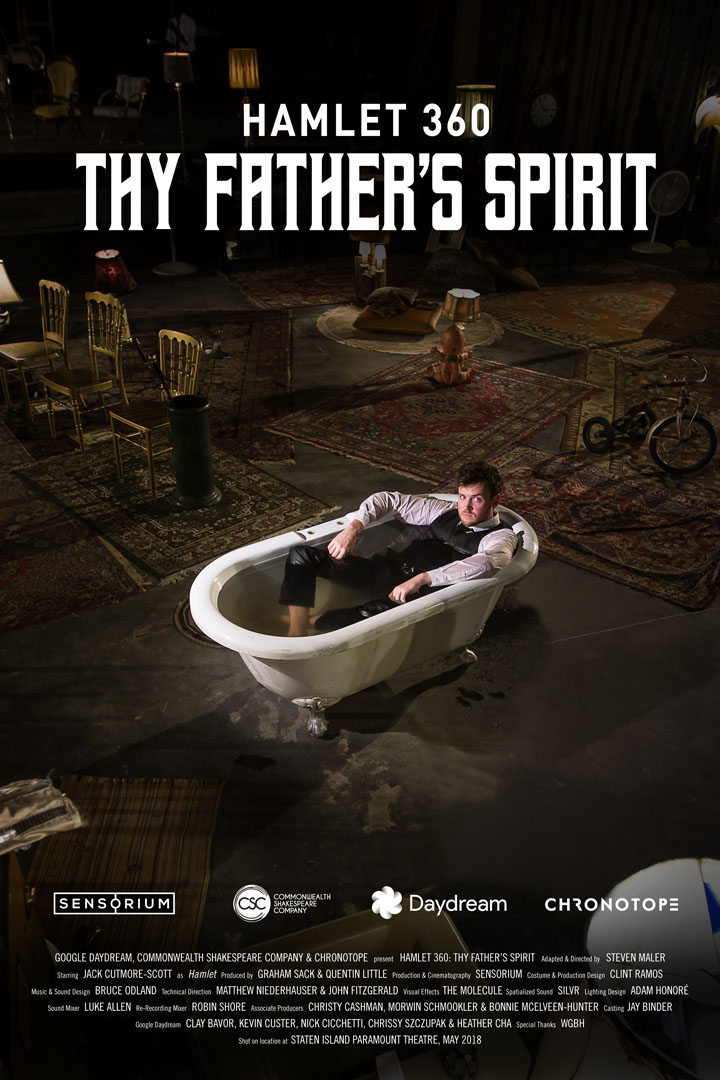

HAMLET 360: THY FATHER’S SPIRIT

Technical Director, Cinematographer & Editor

“Hamlet 360: Thy Father’s Spirit” brings Shakespeare’s most iconic play to vivid life, harnessing the power of virtual reality to take viewers deep within the masterpiece through Hamlet’s harrowing journey. The 60-minute, cinematic, 360-degree adaptation also explores new dimensions in the performing arts by casting the viewer as the Ghost of Hamlet’s dead father, giving the viewer a sense of agency and urgency as an omniscient observer, guide and participant. Every moment embraces the immersive power of the medium.

The experience is set in a dilapidated, once-glorious hall – the same room where the fateful violent duel takes place. Paint peels off the walls, water drips from the ceiling, ruined furniture gathers dust. Shards of Hamlet’s memories—the faded detritus of his life—are spread throughout the room. On one end, a stage; to the side, a large claw foot tub; and just above, a mound of earth with a few tombstones and a fresh rough-hewn grave.

The production design embraces the interiority of the play and immerses the characters (and the viewer) in Hamlet’s chaotic, turbulent mindscape. This dream world is both hyper-real and tangible as well as surreal and expressionistic. It’s a rich and detailed environment that invites continual exploration and discovery. The experience was adapted and directed by Commonwealth Shakespeare Company’s Founding Artistic Director Steven Maler with the support of Google and released in partnership with Boston public media producer WGBH.

Adaptation & Direction: Steven Maler

Producers: Graham Sack & Quentin Little

Production & Cinematography: Sensorium

Costume & Production Design: Clint Ramos

Music & Sound Design: Bruce Odland

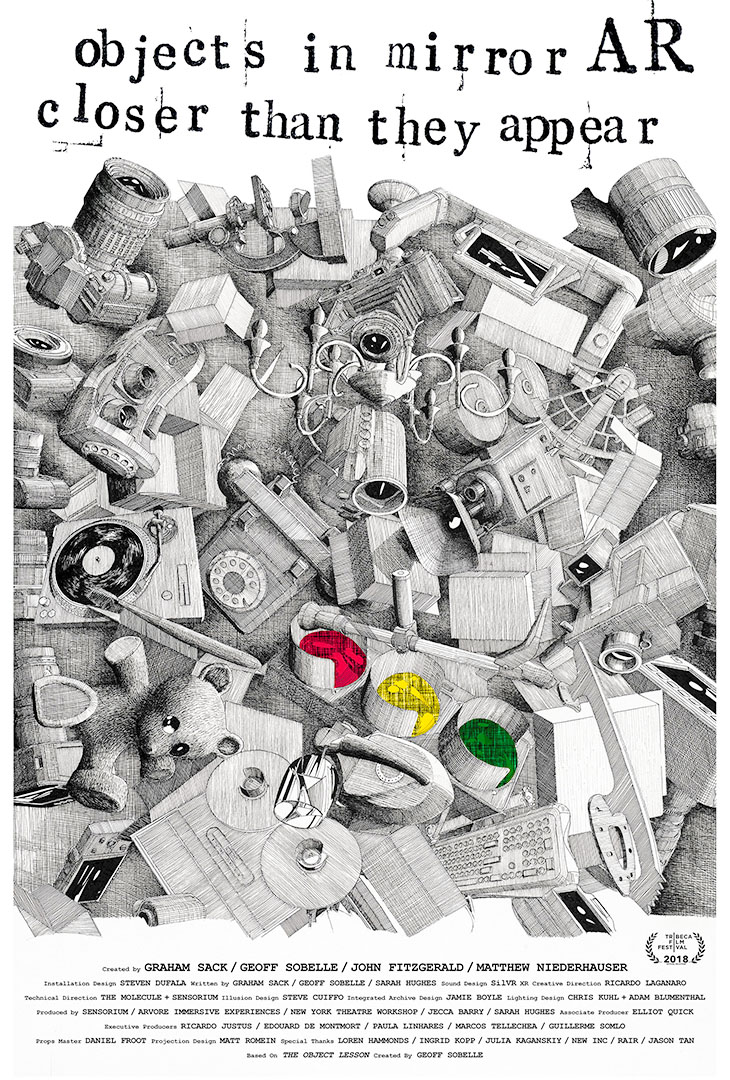

objects in mirror AR closer than they appear

Creator & Technical Director

“objects in mirror AR closer than they appear” is a new media installation that destabilizes the distinction between virtual and physical objects and creates a philosophical playground to explore the shifting relationship between images, memories, and things. The project acts as a window into inner worlds, portals that transport us into memory, nostalgia, and absurdist fantasy all while exploring how technology can make this ephemeral experience visceral and immediate to the senses.

The advent and adoption of virtual, augmented, and mixed reality technologies promise to radically destabilize our traditional distinction between virtual and physical objects. “objects in mirror AR closer than they appear” combines augmented reality technology with an immersive theater installation to explore this liminal space, inviting audiences to reflect on the relationship between new media and archaic objects; 21st century technology and 19th century magic; memory and optical illusion.

The project is an archaeological dig: the site is the everyday heap of objects that surround each and every one of us — a massive, overwhelming pile of junk that describes in debris each of our personal histories. The installation becomes activated through chance, an audience’s curiosity, and their willingness to go along. By the end, the experience is orchestrated chaos — lines between fact/fiction, yours/theirs, installation/performance, subject/object are all undone.

Created by Graham Sack, Geoff Sobelle, John Fitzgerald, and Matthew Niederhauser

Installation Design by Steven Dufala

XR Creative Direction by Ricardo Laganaro

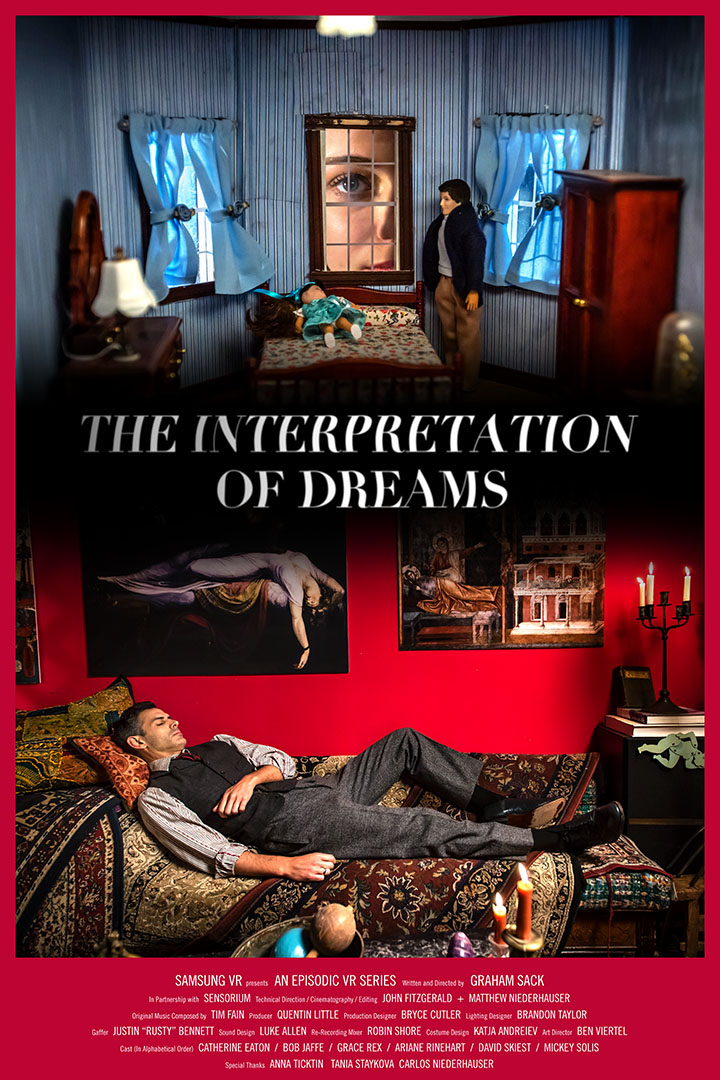

INTERPRETATION OF DREAMS

Technical Director & Cinematographer

In 1899, Freud published his magnum opus, The Interpretation of Dreams, which shocked the world and forever changed our understanding of dreams and the unconscious mind. This episodic narrative fiction series reimagines each of Freud’s original case studies — “The Ratman”, “Dora”, “Anna O”, “Irma’s Injection” — as emotionally haunting immersive VR dreamscapes. Each episode unfolds as a mystery to be solved. As Freud wrote, “Dreams are the royal road to the unconscious.”

Those dreamscapes include dank, candle-lit basements, faceless men, a dollhouse inferno, and anxious shifts in perspective that put the viewer in the position of the dreamer. Much of the series is staged in dilapidated spaces that are on the verge of falling apart. Long-abandoned structures, exposed brick walls and paint peeling from surfaces form the backdrop framed by art director Bryce Cutler and lighting designer Brandon Taylor. With masterful aesthetics, the series immerses audiences in a canvas for troubled minds.

Federico Fellini famously called film “a dream we dream with our eyes open.” Filmmakers from Tarkovsky to Lynch to Nolan have used cinema to explore dreamscapes. How much more is now made possible by the full sensory immersion of virtual reality? The medium is uniquely well-suited to the representation of dreams, providing a vast new vocabulary for the exploration and visualization of the unconscious, from the construction of surreal landscapes to the distortion of time, space, perception, and physical law.

Written & Directed by Graham Sack

Technical Direction & Cinematography by Sensorium

Original Compositions by Tim Fain

ZIKR: A SUFI REVIVAL

Creator & Cinematographer

“Zikr: A Sufi Revival” is a 17-minute interactive social VR experience that uses song and dance to transport four participants into ecstatic Sufi rituals. It also explores the motivations behind followers of this mystical Islamic tradition, still observed by millions around the world. Sufism is a branch of Islam that is often cast as esoteric. But in Tunisia, Sufism is deeply bound to national heritage and popular culture, especially through music. In the aftermath of the Arab Spring, Tunisia is also looking to Sufism as a viable and more individualistic alternative to conservative Salafi movements.

The social component of this experience allowed four participants to see each other and affect each other’s environment through a rope of prayer beads. Participants also interact with each other through dance and other objects that alter the experience itself. “Zikr: A Sufi Revival” needs to be shared between people. The installation also includes antechambers decorated with art to prepare participants for the rituals.

Understanding Sufism, by its very nature, is experiential. “Zikr: A Sufi Revival” takes four participants on an interactive journey into the world of ecstatic ritual, dance and music, in order to explore the nature of faith alongside followers of this mystical Islamic tradition. Singing and dancing alongside Tunisian Sufis, you can activate visual and experiential features through your movement. With this physical interaction with Sufi traditions and interviews with its practitioners, the experience aims to introduce new facets of Islam, revealing a practice of inclusion, acceptance, art, joy and understanding.

Created by Gabo Arora, John Fitzgerald, and Matthew Niederhauser

Produced by Sensorium, Superbright, and Tomorrow Never Knows

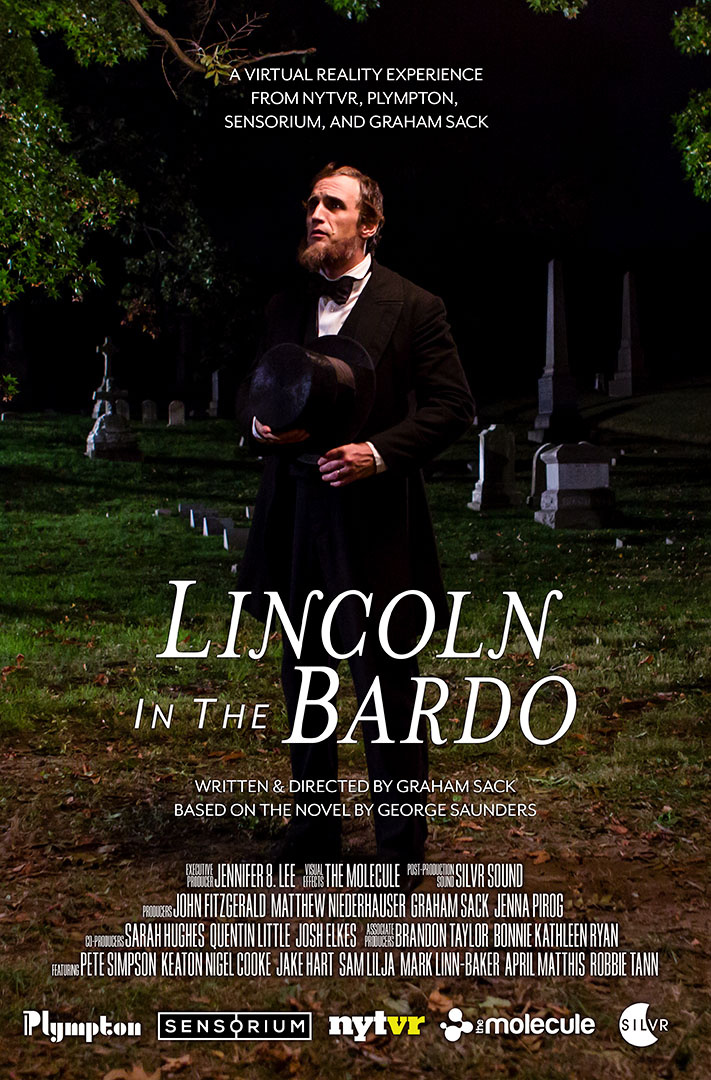

LINCOLN IN THE BARDO

Technical Director & Cinematographer

On February 22, 1862, Abraham Lincoln’s beloved son Willie is laid to rest in a marble crypt in a Georgetown cemetery. That very night, in the midst of the Civil War, Abraham Lincoln arrives at the cemetery shattered by grief and, under cover of darkness, visits the crypt to spend time with his son’s body. Set over the course of that one night and narrated by a chorus of ghosts of the recently passed and the long dead, “Lincoln in the Bardo” is a thrilling exploration of death, grief, and the powers of good and evil.

The experience places the viewer in the perspective of a dead soul, newly arrived in a cemetery. Another new arrival, Willie Lincoln, is there as well. He is a little gentleman, a sickly child, who is frightened and searching for his father. The viewer is then “welcomed” by a series of dead spirits, each of whom delivers a monologue about their lives. The spirits are unwilling to admit that they’re dead, insisting that they will soon recover and return to their past lives. With the entire weight of evidence against them, the only way that these dead souls can maintain the fiction that they are merely ill and will recover is by constantly retelling monologues about their lives, which they neurotically recite to the viewer.

The experience was written and directed by Graham Sack based on the bestselling novel by acclaimed author George Saunders, which debuted at #1 on the New York Times bestseller list and won the Booker Prize. The experience is the first-ever direct adaptation of a novel into virtual reality and was produced by NYT VR, Plympton, Sensorium, and Graham Sack, with collaboration from Penguin Random House. The Molecule created the visual effects with spatialized audio by SilVR Sound.

Written & Directed by Graham Sack

Technical Direction & Cinematography by Sensorium

Original Compositions by SilVR Sound